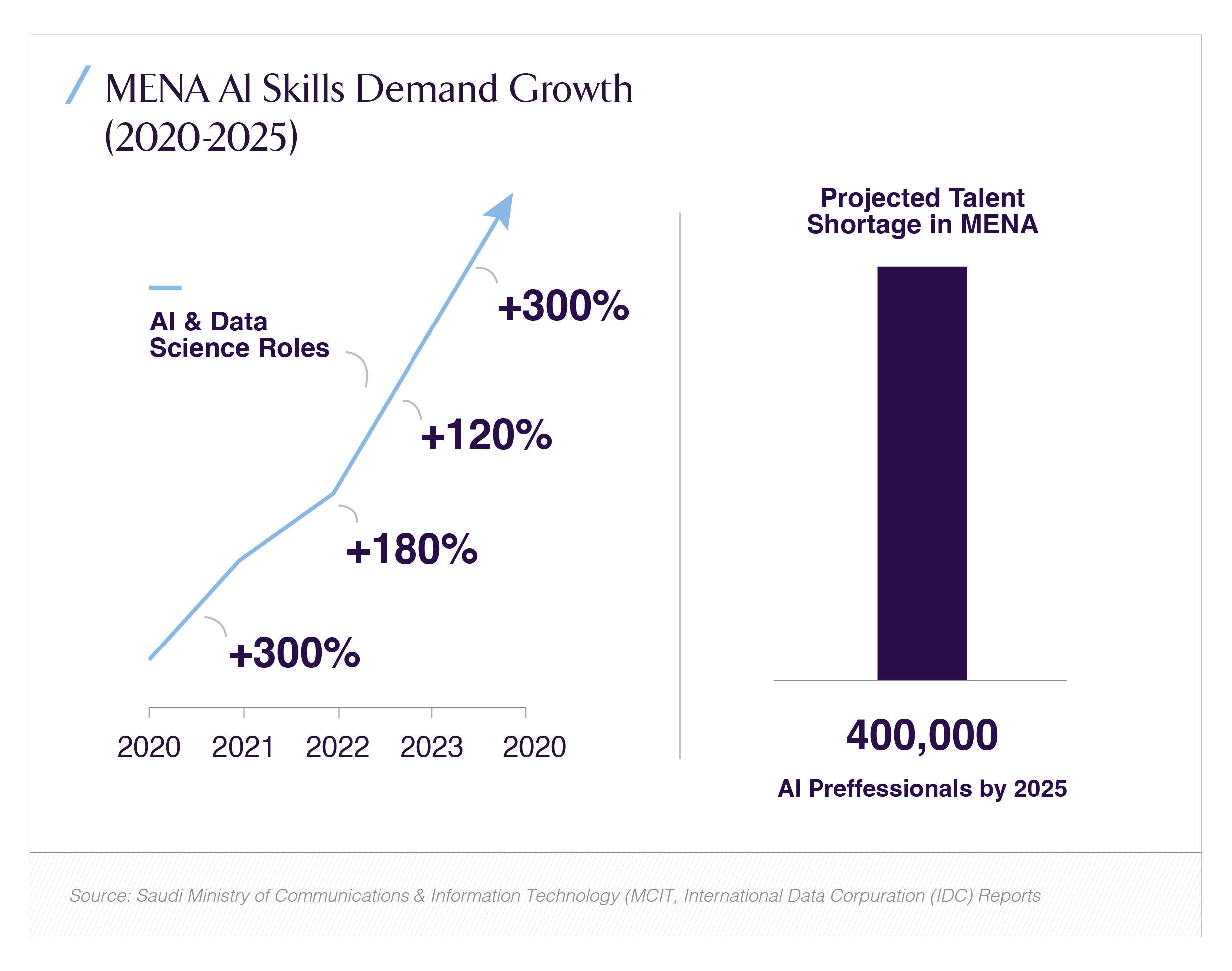

When a government of any size decides to train six-figure cohorts in a strategic technology, it is not just running an education program; it is practicing national industrial policy. In October, Saudi Arabia’s Ministry of Communications & Information Technology (MCIT) announced a program to certify 100,000 citizens in AI and data skills. This is a significant step toward the broader national goal of equipping one million Saudis with AI capabilities. The partnership with analytics firm Incorta, together with related MCIT programs such as “Mostaqbali,” signals that Saudi Arabia is accelerating plans to build both human capital and hard infrastructure for the AI era.

This article digs into the practical mechanics and strategic logic of that push: what the curriculum will likely need to look like, how public–private partnerships (PPPs) fit in, what certification benchmarks should mean in practice, what ROI the kingdom can expect, the risk that trained talent could emigrate, and how these training efforts align with Humain, the PIF-backed AI venture building cloud and GPU capacity in the kingdom.

Why 100,000 matters and why it’s tactical, not symbolic

Large headline numbers serve two related functions: they mobilize resources and set expectations. A 100,000-person certification cohort is big enough to nudge labor markets and small enough to be delivered in a single program cycle. That makes it ideal for proving a model that can then scale to the one-million target. The announcement also dovetails with other strands of national policy (education, labor, investment) that together create demand for certified graduates, reducing the risk that training exists in a policy vacuum.

That said, the real impact depends on what the certificates actually certify. A certificate that signals years of mastery is not the same as a short online micro-credential. The program’s architecture (its levels, its assessments, and the degree of employer buy-in) will determine whether the certificate becomes a passport to an AI job or just a line on a CV.

Curriculum design: stacked, applied, and outcome-driven

Effective national upskilling programs tend to be modular, competency-based, and anchored to employer needs. A pragmatic curriculum for a national 100,000-person program should include at least three stacked tracks:

- Foundations (for non-technical professionals): AI literacy, ethics, data awareness, and use-case ideation, so managers and domain experts can integrate AI tools.

- Practitioner (for analysts and developers): data engineering basics, ML fundamentals, model evaluation, MLOps primers, and hands-on projects with real datasets.

- Specialist (for engineers and researchers): deep learning, model optimization, large-model fine-tuning, GPU-aware engineering, and systems skills (distributed training, inference cost control).

Each track must be heavily project-based: capstone assignments co-designed with employer partners and validated by real-world KPIs (e.g., a deployed classifier that reduces processing time by X%). Assessment models should mix automated tests with human-reviewed projects so that certificates map to demonstrable outcomes rather than rote knowledge. This approach also allows stackable credentials (badges → micro-certificates → full certifications), which support retention and lifelong learning.

Public–private partnerships: why companies like Incorta matter

MCIT’s choice to partner with Incorta and to publish the program on national e-learning platforms is smart for several reasons. Cloud and analytics vendors bring ready-built curricula, labs, datasets, and often employer networks. They can scale content delivery, supply sandboxed environments, and offer mentorship from practitioners. Incorta’s public write-ups describe a program focused on practical, data-driven skills that align with enterprise analytics needs, not just academic theory.

But PPPs must be governed properly: the public partner should retain curriculum oversight, ensure open-access elements for equitable participation, and mandate employer engagement in project evaluation. Otherwise, commercial incentives may skew training toward proprietary stacks rather than transferable skills.

Certification benchmarks: what good looks like

A credible national certification program should meet three criteria:

- Rigorous competency validation: tests must evaluate applied skills (project deployment, code review, data pipelines), not just multiple-choice theory.

- Employer recognition: leading firms should accept the certification as evidence of employability; otherwise, the credential will have limited labor-market value.

- Transparency and portability: credential metadata (skills, assessment types, pass rates) should be public so employers and learners can make informed choices.
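The transparency criterion is easiest to picture as a small, machine-readable record published alongside each credential. The Python sketch below is illustrative only: the class and field names (`CredentialRecord`, `assessment_types`, `prerequisites`, and so on) are hypothetical assumptions, not drawn from any MCIT or Incorta specification, and the candidate counts are invented. The `prerequisites` field shows one way a stackable path could be encoded.

```python
from dataclasses import dataclass, asdict, field
import json


@dataclass
class CredentialRecord:
    """Hypothetical public metadata for one certification level."""
    name: str
    track: str                    # e.g. "Foundations", "Practitioner", "Specialist"
    skills: list[str]
    assessment_types: list[str]   # how competence was validated
    candidates_assessed: int
    candidates_passed: int
    prerequisites: list[str] = field(default_factory=list)  # lower rungs of the stack

    @property
    def pass_rate(self) -> float:
        # Share of assessed candidates who passed; 0.0 if nobody was assessed.
        if self.candidates_assessed == 0:
            return 0.0
        return self.candidates_passed / self.candidates_assessed

    def to_json(self) -> str:
        # Serialize the stored fields and add the derived pass rate.
        data = asdict(self)
        data["pass_rate"] = round(self.pass_rate, 3)
        return json.dumps(data, ensure_ascii=False, indent=2)


# Invented example values, for illustration only.
record = CredentialRecord(
    name="Practitioner Certificate (illustrative)",
    track="Practitioner",
    skills=["data pipelines", "model evaluation", "MLOps basics"],
    assessment_types=["automated tests", "human-reviewed capstone"],
    candidates_assessed=1200,
    candidates_passed=780,
    prerequisites=["Foundations Certificate (illustrative)"],
)
print(record.to_json())
```

Publishing the derived pass rate next to the raw counts lets employers check the figure instead of taking it on trust; a production schema would probably also need issuer identifiers and versioning.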

MCIT can accelerate employer recognition by co-creating assessment rubrics with large national employers (energy, finance, health), guaranteeing internships or placement pathways for top performers, and publishing placement statistics for each cohort. This closes the loop between training outputs and tangible job outcomes.

Expected ROI: near-term and structural

Return on investment can be shown across multiple horizons:

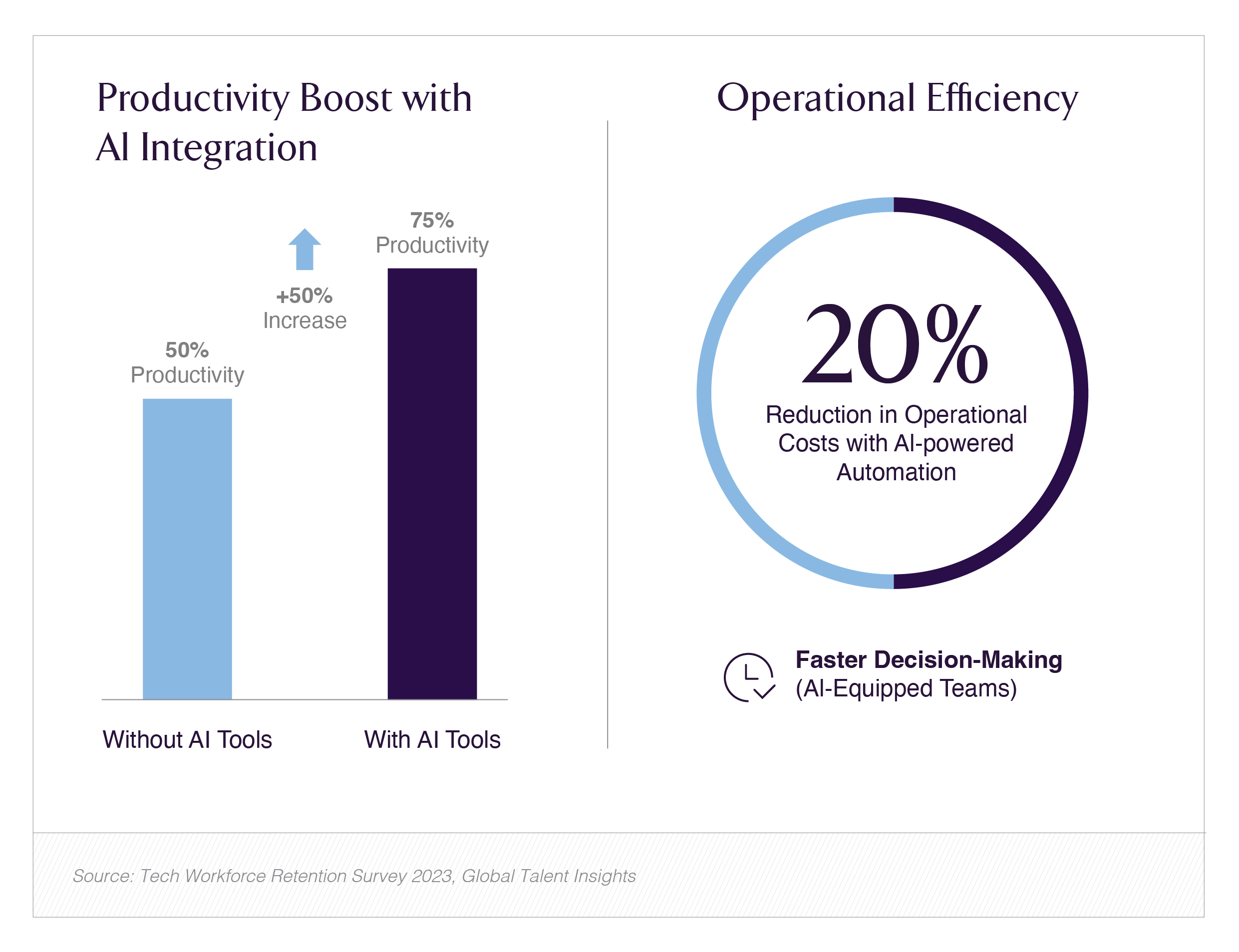

- Short term (1–2 years): Productivity gains from AI-literate staff adopting automation tools; reduced outsourcing for analytics tasks; faster internal prototyping cycles.

- Medium term (3–5 years): New domestic AI startups, lower tech import costs, higher-value services exports.

- Long term (5–10 years): Talent availability that underpins capital investments such as Humain’s planned data centers and GPU farms, making the kingdom not just a market for AI but a supplier of AI services and models.

Because Humain and other large infrastructure projects will create local compute demand, upskilled talent lowers the total cost of deploying those assets (local engineers, localized MLOps) and improves capture of value from AI-driven projects.

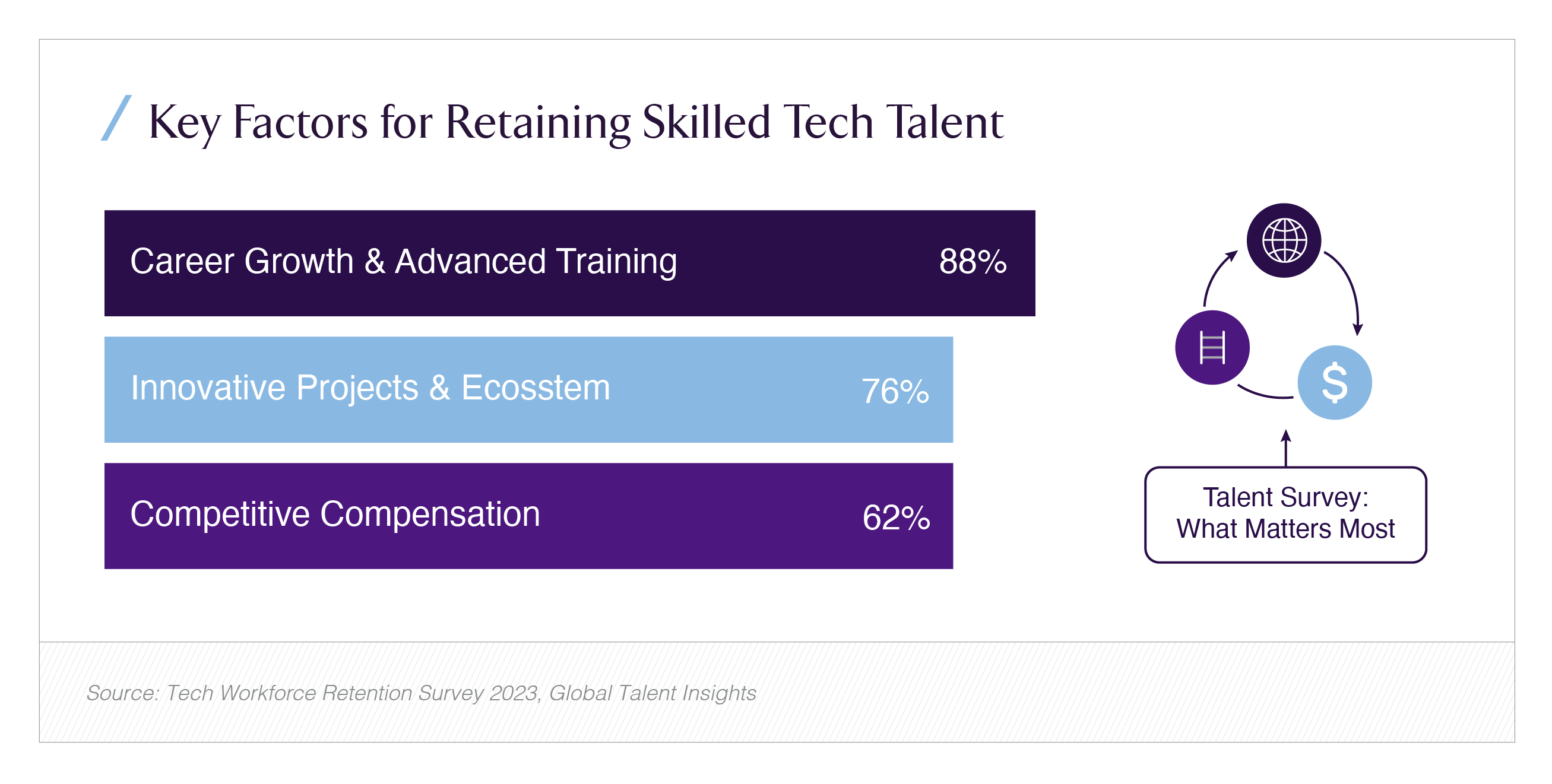

The brain-drain risk and how to reduce it

Training more workers inevitably increases their global marketability. A classic risk: the very graduates the kingdom needs become attractive to foreign employers. Saudi Arabia can reduce this risk by coupling training with:

- Competitive domestic opportunities: incentives for startups, procurement policies that favor local vendors, and partnerships that guarantee job pipelines (internships, fellowships).

- Mobility advantages at home: fast-tracked visas/permits for international experts to work in Saudi AI projects, creating a denser local ecosystem.

- Retention hooks: scholarships tied to service commitments, equity-share programs in national AI startups, and meaningful career ladders.

Another lever is to make training context-specific: graduates trained on local datasets (energy, water, Arabic-language NLP) develop domain expertise that is directly valuable to national projects and less portable elsewhere.

Aligning workforce training with Humain’s computing ambitions

Humain, the PIF-backed AI firm building significant data-center capacity and aiming to host large NVIDIA GPU deployments, is a crucial demand-side partner for upskilling. Humain’s plans for multi-hundred-megawatt capacity and its partnerships with chipmakers mean Saudi Arabia is not only training people but also creating places for them to work on real, large-scale AI systems. Those compute projects require engineers versed in distributed training, GPU orchestration, data governance, and secure cloud operations: precisely the higher-level skills the specialist track should deliver.

The virtuous cycle is clear: training produces talent → talent enables local AI projects and data centers → local projects validate and retain talent → a domestic AI industrial base grows.

Critical risks and practical mitigations

No national program is risk-free. The biggest operational risks include:

- Quality dilution: pushing quantity over rigor. Mitigation: phased rollouts, strong proctoring, and employer-validated capstones.

- Inequitable access: urban vs rural, men vs women. Mitigation: mobile-friendly, Arabic-language content, regional learning hubs, and targeted outreach.

- Vendor lock-in: curricula tied too tightly to a single vendor’s stack. Mitigation: require open-skill outcomes and multiple-platform interoperability.

- Mismatch with employer demand: teach skills employers don’t need. Mitigation: continuous labor market scans and advisory boards with major sectors.

How success should be measured

Beyond crude completion numbers, success metrics should include:

- Placement rate into relevant roles within 6–12 months.

- Employer satisfaction with certified hires.

- Project impact of capstones (measured in saved hours, revenue uplift, or improved service metrics).

- Retention in-country at 1- and 3-year marks.

- Diversity metrics across gender, region, and socioeconomic background.
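The metrics above can be computed from per-graduate tracking records. The following is a minimal Python sketch under stated assumptions. The `Graduate` record layout and the `cohort_kpis` helper are hypothetical. Placement counts only relevant roles reached within 12 months, the upper end of the 6–12 month window. The three-year retention rate includes only graduates whose three-year status is known. The four-person cohort is invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Graduate:
    """Hypothetical tracking record for one certified graduate."""
    gender: str
    months_to_relevant_role: Optional[int]  # None if not yet placed
    in_country_year_1: bool
    in_country_year_3: Optional[bool]       # None if the 3-year mark has not arrived


def rate(numerator: int, denominator: int) -> float:
    """Safe ratio: returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def cohort_kpis(grads: list[Graduate], placement_window: int = 12) -> dict[str, float]:
    n = len(grads)
    # Placed = reached a relevant role within the placement window (in months).
    placed = sum(
        1
        for g in grads
        if g.months_to_relevant_role is not None
        and g.months_to_relevant_role <= placement_window
    )
    # Only graduates with a known year-3 status enter the 3-year retention rate.
    tracked_3y = [g for g in grads if g.in_country_year_3 is not None]
    return {
        "placement_rate": rate(placed, n),
        "retention_year_1": rate(sum(g.in_country_year_1 for g in grads), n),
        "retention_year_3": rate(
            sum(g.in_country_year_3 for g in tracked_3y), len(tracked_3y)
        ),
        "share_women": rate(sum(g.gender == "female" for g in grads), n),
    }


# Illustrative four-person cohort (invented data).
cohort = [
    Graduate("female", 4, True, True),
    Graduate("male", None, True, None),
    Graduate("female", 10, False, None),
    Graduate("male", 14, True, False),
]
print(cohort_kpis(cohort))
```

Excluding graduates whose three-year mark has not yet arrived avoids counting them as either retained or lost. Employer satisfaction and capstone impact would need survey or project data that this sketch does not model.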

Public reporting on these KPIs will hold the program accountable and attract further private investment.

A national experiment with global stakes

Saudi Arabia’s plan to certify 100,000 citizens in AI, as part of a wider push to empower one million people, is more than workforce development. It is an attempt to industrialize artificial intelligence by synchronizing human capital formation with heavy infrastructure investments (data centers, chips, cloud partnerships) and policy levers. With players such as Incorta delivering applied analytics curricula and Humain building the compute backbone, the kingdom is attempting an integrated stack from education to deployment. If executed well, this could accelerate domestic innovation and lower dependence on imported talent and services; if done poorly, it could generate certificates without real economic change.

Either way, the initiative is an instructive case study for any nation trying to convert AI ambition into domestic capability: measurable outcomes, employer buy-in, aligned infrastructure, and an honest assessment of retention risks will determine whether the 100,000 figure becomes a milestone or merely a headline.